MansBrand.com Articles Provided as noted by attribution.

In February of last year, the San Francisco-based research lab OpenAI announced that its AI system could now write convincing passages of English. Feed the beginning of a sentence or paragraph into GPT-2, as it was called, and it could continue the thought for as long as an essay with almost human-like coherence.

Now, the lab is exploring what would happen if the same algorithm were instead fed part of an image. The results, which received an honorable mention for the best paper award at this week’s International Conference on Machine Learning, open up a new avenue for image generation, ripe with opportunity and consequences.

At its core, GPT-2 is a powerful prediction engine. It learned to grasp the structure of the English language by looking at billions of examples of words, sentences, and paragraphs, scraped from the corners of the internet. With that structure, it could then manipulate words into new sentences by statistically predicting the order in which they should appear.
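To make the idea of statistical next-word prediction concrete, here is a minimal sketch in Python. It is not GPT-2, which is a large neural network; it is a far simpler stand-in that counts which word follows which in a tiny made-up corpus and then picks the most frequent follower. The function names and the corpus are hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def continue_text(counts, start, length):
    """Extend `start` by up to `length` words, one prediction at a time."""
    words = [start.lower()]
    for _ in range(length):
        next_word = predict_next(counts, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


# Toy corpus (an assumption for illustration; GPT-2 trained on far more text).
corpus = "the cat sat on the mat and the cat slept on the mat"
counts = train_bigram_counts(corpus)
```

Calling `continue_text(counts, "sat", 2)` extends the starting word by repeatedly asking for the likeliest next word. A model like GPT-2 follows the same predict-and-append loop, but conditions each prediction on a much longer stretch of preceding text.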

So researchers at OpenAI decided to swap the words for pixels and train the same algorithm on images in ImageNet, the most popular image bank for deep learning. Because the algorithm was designed to work with one-dimensional data (that is, strings of text), they unfurled the images into a single sequence of pixels. They found that the new model, named iGPT, was still able to grasp the two-dimensional structures of the visual world. Given the sequence of pixels for the first half of an image, it could predict the second half in ways that a human would deem sensible.
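The unfurling step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not OpenAI's code: it assumes the pixels are read row by row (raster order), uses a toy 4x4 grayscale grid, and the helper names are hypothetical. The first half of the flattened sequence plays the role of the prompt, and the second half is what a model would be asked to predict.

```python
def flatten_image(image):
    """Unroll a 2D grid of pixel values into a 1D sequence, row by row."""
    return [pixel for row in image for pixel in row]


def unflatten_image(sequence, width):
    """Fold a 1D pixel sequence back into rows of the given width."""
    if width <= 0 or len(sequence) % width != 0:
        raise ValueError("sequence length must be a positive multiple of width")
    return [sequence[i:i + width] for i in range(0, len(sequence), width)]


def split_prompt(sequence):
    """Split a sequence into a first half (prompt) and a second half (target)."""
    midpoint = len(sequence) // 2
    return sequence[:midpoint], sequence[midpoint:]


# Toy 4x4 grayscale image (an assumption; real images are far larger).
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
    [255, 255, 0, 0],
]

sequence = flatten_image(image)         # 16 pixel values in reading order
prompt, target = split_prompt(sequence)  # top two rows, bottom two rows
```

Under the row-by-row assumption, the first half of the sequence corresponds to the top half of the picture, so completing the sequence amounts to filling in the bottom half. Note that the flattened sequence carries no explicit record of which pixels sat above or below one another; the model has to infer that from the data.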

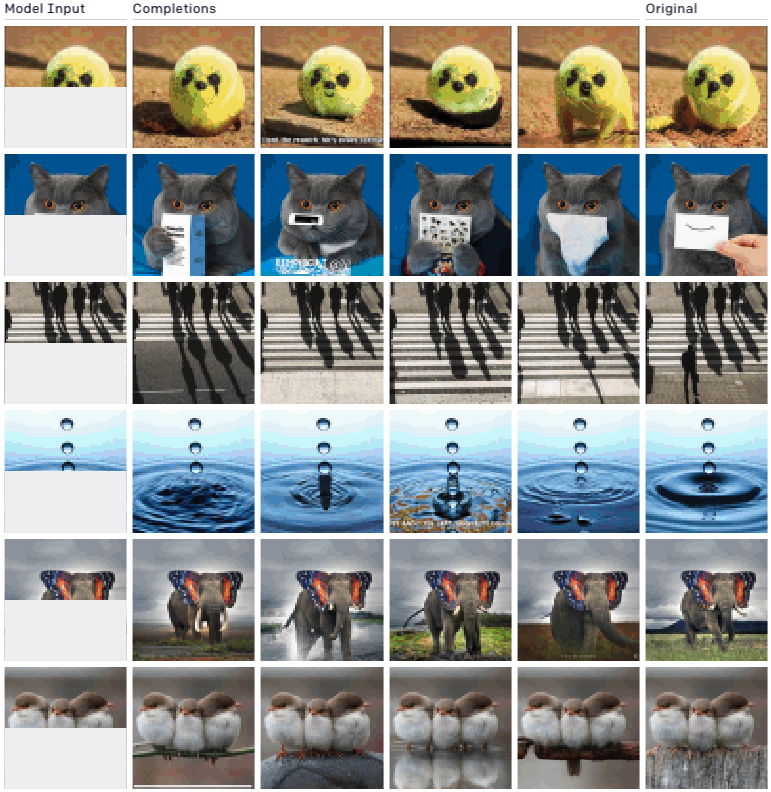

Below, you can see a few examples. The leftmost column is the input, the rightmost column is the original, and the middle columns are iGPT’s predicted completions.

Image credit: OpenAI

The results are startlingly impressive and demonstrate a new path for using unsupervised learning, which trains on unlabeled data, in the development of computer vision systems. Early computer vision systems in the mid-2000s trialed such techniques, but they fell out of favor as supervised learning, which uses labeled data, proved far more successful. The benefit of unsupervised learning, however, is that it allows an AI system to learn about the world without a human filter, and significantly reduces the manual labor of labeling data.

The fact that iGPT uses the same algorithm as GPT-2 also shows its

————

By: Karen Hao

Title: OpenAI’s fiction-spewing AI is learning to generate images

Sourced From: www.technologyreview.com/2020/07/16/1005284/openai-ai-gpt-2-generates-images/

Published Date: Thu, 16 Jul 2020 13:59:09 +0000
